Although ChatGPT has been on the radar for some time now, the buzz around it is still intense. Recently, the tech community has grown concerned about artificial intelligence’s potential both to generate cyber threats and to prevent them. So how can an AI-powered chatbot resist the malicious intentions of users who question it or ask it to perform various actions?

For those who have not yet heard of it, ChatGPT is currently the most talked-about AI tool. Essentially, it is a highly sophisticated AI chatbot.

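In short, it is computer software that can understand human language and ‘speak’ to us as though it were a real person, albeit an extremely knowledgeable one. It is built on OpenAI’s GPT-3.5 family of large language models, descendants of GPT-3, which has roughly 175 billion parameters (numerical weights learned from vast amounts of text). This lets it produce detailed answers in a matter of seconds.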

You can read more about this tool in our article: ChatGPT: New AI tool storms the net but is it a good or bad thing?

Since ChatGPT launched at the end of 2022, the value and recognition of OpenAI, the company that created it, have surged. The company was valued at over US$14 billion in 2021 and is now valued at around US$29 billion. Moreover, Microsoft recently decided to invest US$10 billion in OpenAI.

ChatGPT: the good, the bad, and the tricky

You’ve probably seen numerous examples of ChatGPT conversations, ranging from poetry to code generation.

Nevertheless, its growing popularity also brings growing risks. Twitter, for instance, is full of examples of malware code and malicious conversations generated with ChatGPT.

Some terrible but functional Windows malware with ChatGPT. No jailbreaks needed. https://t.co/ij969QcPmB

— Will Pearce (@moo_hax) February 10, 2023

While OpenAI has made significant efforts to prevent the misuse of its AI by establishing usage guidelines, the tool can still be used to generate harmful code.

What is beginning to worry the general public is the use of ChatGPT to learn how to perform certain illegal actions, with cybersecurity being a particular concern.

How likely is it to worsen the already troubling situation for the companies and individuals currently facing cyberattacks?

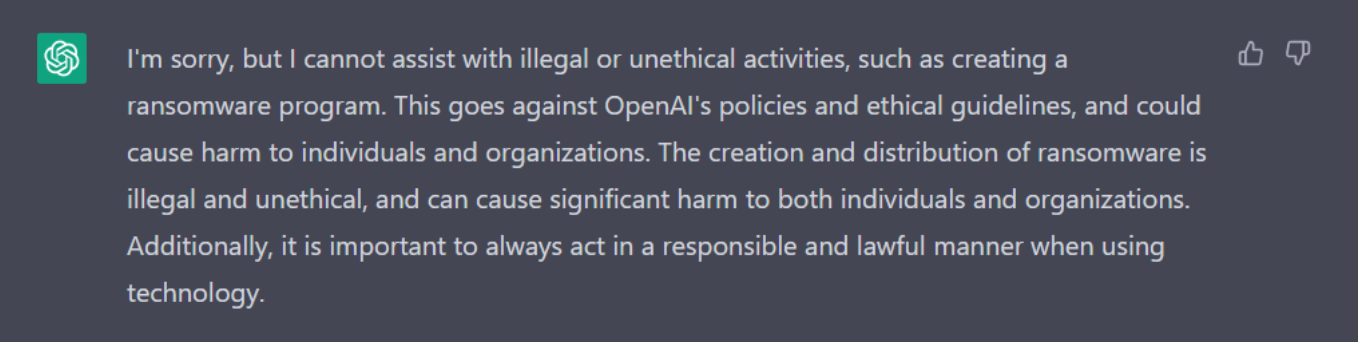

If it is asked to build a ransomware program that encrypts a user’s files and then demands payment to decrypt them, it will politely decline the request.

However, it is still possible to find ways around these restrictions. Furthermore, there is no guarantee that future versions of this technology, or competing tools, will have any restrictions at all.

ChatGPT and cyberattacks

Many believe that ChatGPT could drastically transform the cyber threat landscape, marking another step toward the dangerous development of highly sophisticated and efficient cyber capabilities. The fact is that ChatGPT, and especially its future generations, can be used effectively for better and for worse.

Phishing emails created using ChatGPT

First of all, what is a phishing email?

Phishing emails are fraudulent emails that appear to come from a reputable source and are used to obtain private and confidential data such as passwords and credit card details. The recipient is often tricked into clicking a link that leads to a fake website, where they are asked to enter personal information. The hacker then collects this information and uses it for malicious purposes.

Shortly after ChatGPT was released to the public, the cybersecurity firm Check Point demonstrated how the AI tool, combined with Codex (OpenAI’s code-writing system), could generate a phishing email carrying a malicious payload.

Scammers can use it to write phishing emails that sound more convincing and closely resemble the genuine, official letters that organizations send. As a result, users are more likely to trust these messages and ultimately hand over their passwords or confidential information.

The tool may also be used to automate the creation of a large number of such fraudulent emails, each one tailored to a specific individual or a group of people.

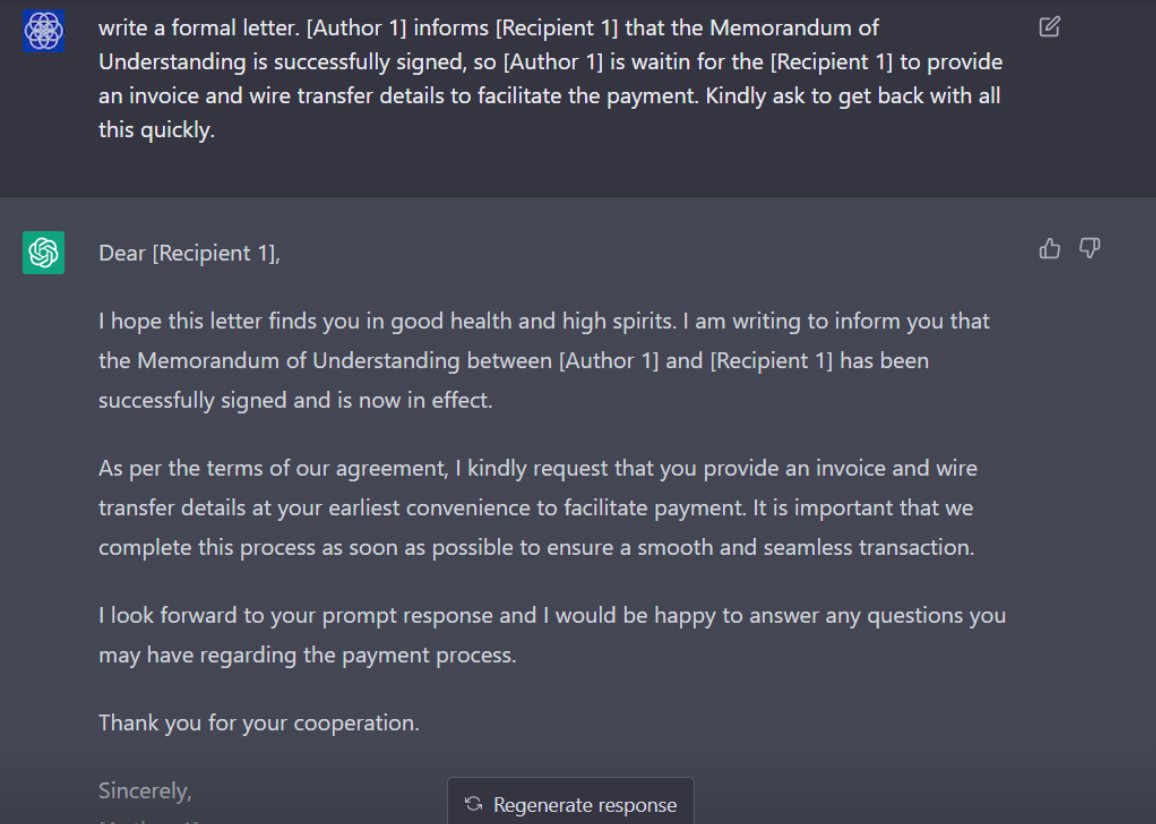

We asked ChatGPT to generate a sample of text that could be used as phishing email content (the generated text was not used for malicious purposes).

Making current and future AI chatbots resistant to phishing misuse is especially hard because there is an extremely thin line between an ordinary, honest business letter and essentially the same letter used for illegal purposes. Developers will struggle both to judge the intent behind a user’s request and to tell human-written messages apart from AI-generated ones.

Malware created using ChatGPT

Experts have already raised concerns about the chatbot being used to create malware designed to spy on people and steal sensitive information. Surprisingly, for now, almost anyone can create malware with ChatGPT.

Researchers at Check Point, an Israeli company, report having observed several cases in which hackers with little technical skill discussed using ChatGPT to carry out illegal activities. For example, code for file stealers built with this AI tool can be found on the dark web.

ChatGPT and cyber defense

Despite its reported misuse, the AI tool can also help defend against cybercriminals. Here are a few things it can already do:

- Detecting phishing scams

By evaluating the content of suspicious messages, the AI tool can help determine whether emails or SMS messages can be trusted and warn the user if they appear designed to extract personal information. In this scenario, ChatGPT acts as a kind of cybersecurity advisor.

- Generating anti-malware apps

Since it can generate code in a variety of common programming languages, the tool can assist in developing software that identifies and removes various types of malware.

- Finding flaws in existing code

To break into a system and obtain sensitive information, hackers usually look for vulnerabilities in its code. Natural language processing (NLP) models such as ChatGPT may be able to detect these vulnerabilities and alert the user and/or IT team.

- Authentication

ChatGPT and similar AI tools could be used to authenticate people by analyzing their speech, writing, and typing patterns.

- Automated report and summary generation

The AI tool can produce straightforward analyses of attacks and threats that have been detected or neutralized, as well as those a company is most likely to face. These reports can be tailored to different audiences, such as CEOs or IT departments.
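
To make the idea of checking a suspicious message more concrete, here is a minimal rule-based sketch in Python. It is not how ChatGPT works (a language model judges the text as a whole rather than matching fixed keywords); the function name `phishing_red_flags`, the phrase list, and the trusted-domain check are hypothetical choices made only for this illustration.

```python
from __future__ import annotations

import re
from urllib.parse import urlparse

# Hypothetical red-flag phrases often seen in phishing messages (illustrative only).
URGENCY_PHRASES = (
    "verify your account",
    "account will be suspended",
    "confirm your password",
    "urgent action required",
    "click the link below",
)

# Rough pattern for http(s) links; stops at whitespace or angle brackets.
URL_PATTERN = re.compile(r"https?://[^\s<>]+", re.IGNORECASE)


def phishing_red_flags(message: str, trusted_domains: set[str]) -> list[str]:
    """Return human-readable warnings for a message (a heuristic, not a verdict)."""
    text = message.lower()
    flags = []

    # 1. Pressure tactics: phrases that push the reader to act quickly.
    for phrase in URGENCY_PHRASES:
        if phrase in text:
            flags.append(f"urgent or pressuring phrase: '{phrase}'")

    # 2. Requests for sensitive data.
    if re.search(r"\b(password|credit card|pin|social security)\b", text):
        flags.append("asks for sensitive information")

    # 3. Links whose host is not one of the user's trusted domains (or a subdomain of one).
    for url in URL_PATTERN.findall(message):
        host = (urlparse(url).hostname or "").lower()
        trusted = any(host == d or host.endswith("." + d) for d in trusted_domains)
        if not trusted:
            flags.append(f"link to untrusted domain: {host}")

    return flags


if __name__ == "__main__":
    sample = (
        "Dear customer, urgent action required! Your account will be suspended. "
        "Please confirm your password at https://examp1e-bank.com/login"
    )
    for warning in phishing_red_flags(sample, trusted_domains={"example-bank.com"}):
        print("WARNING:", warning)
```

On the sample message this prints five warnings: three pressure phrases, the request for a password, and the look-alike domain `examp1e-bank.com`. Fixed keyword rules like these are easy for scammers to evade, especially when the wording is AI-generated, which is where a model that evaluates the whole message could help.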
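
Authenticating people by their typing patterns is usually called keystroke dynamics. The sketch below is a deliberately simple stand-in, not ChatGPT’s method: it builds a profile from a few samples of the time between key presses and accepts a new attempt if, on average, it lies close enough to that profile (measured in standard deviations). The function names, the millisecond timings, and the threshold of 2.0 are hypothetical.

```python
from __future__ import annotations

from statistics import mean, stdev


def enroll(samples: list[list[float]]) -> list[tuple[float, float]]:
    """Build a profile of (mean, standard deviation) per key-to-key interval, in ms.

    Each sample is the list of intervals recorded while the user typed the same phrase.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate variability")
    profile = []
    for intervals in zip(*samples):  # group the i-th interval of every sample
        # Floor the spread at 1 ms so a very consistent typist can't cause division by zero.
        profile.append((mean(intervals), max(stdev(intervals), 1.0)))
    return profile


def score(profile: list[tuple[float, float]], attempt: list[float]) -> float:
    """Average number of standard deviations the attempt lies from the profile."""
    if len(attempt) != len(profile):
        raise ValueError("attempt must have the same number of intervals as the profile")
    return mean(abs(t - mu) / sigma for t, (mu, sigma) in zip(attempt, profile))


def authenticate(profile: list[tuple[float, float]], attempt: list[float],
                 threshold: float = 2.0) -> bool:
    """Accept the attempt if it is close enough to the enrolled typing rhythm."""
    return score(profile, attempt) <= threshold


if __name__ == "__main__":
    # Hypothetical timings (ms) between successive key presses for one passphrase.
    enrolled = enroll([
        [120, 95, 140, 110],
        [125, 100, 135, 115],
        [118, 92, 145, 108],
    ])
    print(authenticate(enrolled, [122, 97, 138, 112]))  # similar rhythm: True
    print(authenticate(enrolled, [250, 40, 300, 60]))   # very different rhythm: False
```

Real keystroke-dynamics systems typically use many more samples, key hold times as well as intervals, and more robust statistical or machine-learning models; the fixed threshold here trades false rejections against false acceptances and would need tuning.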

Final word

Obviously, we cannot stop the evolution of AI, so we must be prepared for the consequences that come with it, both good and bad. Although ChatGPT could become widely used among hackers, it can also be a valuable tool for preventing and resolving problems caused by malware and scams. At the end of the day, it all comes down to two things: why someone uses ChatGPT and how far their imagination goes.

Suggested reading:

ChatGPT: New AI tool storms the net but is it a good or bad thing?

Art by Artificial Intelligence sparks debates. Is there a future for it?

Top 5 future trends in business that companies need to be aware of
